
Ethics: Artificial Intelligence:

The Benefits and Pitfalls of Emotion AI in Human-Machine Communication: An Ethical Observation

by Assoc. Prof. Dr. Anna Rostomyan, Former HR Manager at Porsche Center Yerevan; PhD Research Fellow at the University of Fribourg, Switzerland

and Dr. Armen Rostomyan, After Sales Director at Porsche Center Yerevan; Senior Researcher at Yerevan State University, Armenia

Link for Citation Purposes: https://bwwsociety.org/journal/archive/emotion-ai-human-machine-communication.htm

With the rapid advancement of emerging technologies, the landscape of human-machine communicative interaction is changing as well. Human-machine cooperation therefore deserves a closer look, which this article attempts to provide by considering both the advantages and the disadvantages of these recent developments.

According to Nagy (2018), Artificial Intelligence (AI) is a science that constructs intelligence using hardware and software solutions. It draws on reverse engineering, for example of the way neurons work in the human brain. Our brain consists of small units called neurons, which form networks known as neural networks. According to the same author, beyond neural networks, there are many other models in neuroscience that can be used to solve real-world problems in artificial intelligence.

Moreover, according to Marr (2021), many experts believe that developing Artificial General Intelligence, which possesses a deeper level of understanding, is how machines may one day experience human emotions as we do. Artificial General Intelligence (AGI), as opposed to Artificial Narrow Intelligence, refers to the ability of computers to carry out a wide range of different activities, as humans do. Artificial Narrow Intelligence (ANI), by contrast, as its name suggests, aims to complete individual tasks, but with a high degree of efficiency and accuracy.

Emotional and social intelligence, forms of intelligence that are not necessarily tied to a set task or end goal, fall under Artificial General Intelligence. AGI aims to replicate qualities of ours that seem automatic and inborn. We exercise them without any end goal, simply because we do; machines, by contrast, need integrated programs to act in this way. Yet, nowadays machines can not only perceive and decode human emotions, but can even replicate them (Rostomyan, 2024a). Many therefore firmly believe that, as artificially intelligent machines are further developed and equipped with additional EI/EQ (Emotional Intelligence) soft skills such as empathy, they may one day replace humans, which is already happening in some industries such as healthcare, marketing, and HR (see more in Rostomyan et al., 2024).

The concept of AI is not new. The idea of creating an intelligent being was proposed and discussed in various ways by writers and philosophers centuries before the computer was invented. Let us briefly look at some of these earlier conceptions. Finlay and Dix (1996) describe how the earliest writers imagined their “artificial” beings as created from stone: the Roman poet Ovid told the story of Pygmalion, the sculptor, whose statue of a beautiful woman was brought to life through the sculptor’s imagination. The play “Pygmalion” by the Irish playwright George Bernard Shaw, named after this Greek mythological figure, and the musical “My Fair Lady”, adapted from Shaw’s play, are more recent renditions of this fable. Much later, in the age of industrial machines, Mary Shelley had Doctor Frankenstein manufacture a man from separate components and bring him to life through electricity. Films such as “The Terminator” present a vision of cyborg machines almost indistinguishable from humans. The same can be observed in the “Transformers” films directed by Michael Bay, in which some of the main characters are endowed with AI and, moreover, can transform, as well as read and respond to human emotions in a human-like manner, as is nowadays the case with emotion-aware machines (Rostomyan, 2024a).

Moreover, early philosophers also considered whether human intelligence could be reproduced in a machine. In his Discourse on the Method (1637), Descartes argued that, although machines might one day pass as animals, they could never pass as humans. He identified two reasons for this assertion: machines lack the ability to use language and the ability to reason. Today, however, this claim is open to challenge. To understand the concept of Emotion AI, we first need to refer to the notion of EI/EQ, namely Emotional Intelligence, which is our ability to perceive, understand, manage, and use our emotions depending on the situational context at hand (Goleman, 1995), and which enables both humans and machines to communicate and cooperate efficiently, harmoniously, peacefully, and productively.

Despite its potentially confusing name, Emotion AI does not refer to a “weeping computer” that has had a bad day or a bad week at work. Emotion AI, also known as Affective Computing, dates back to 1995 and refers to the branch of Artificial Intelligence that aims to perceive, process, understand, and even respond to human emotions in human-machine communicative interactions. The technology aims to make communication between humans and machines more natural, so that AI communicates in a more authentic and empathetic way. If AI can gain emotional intelligence, it may also be able to replicate emotions in human-machine communication, interaction, and cooperation (Rostomyan, 2024a), which is already happening in many different fields.

Affective computing began in 1995 at the MIT Media Lab, where cameras, microphones, and physiological sensors gathered affective responses to identify human emotions, and machines then responded to those emotions. This early work led Media Lab professor Rosalind Picard to publish the book “Affective Computing” in 1997. Today, a machine’s adeptness at evaluating data can help it pick up on subtle emotional nuances that some humans would miss (Marr, 2021). In 2009, Rana el Kaliouby and Picard founded “Affectiva”, an emotion AI company based in Boston that specializes in automotive AI and advertising research. With the user’s consent, the user’s camera captures their reactions while they watch an advertisement. Using “Multimodal emotion AI”, which analyses facial expression, speech, and body language, the company can gain detailed insight into individuals’ different moods. Its reported accuracy of about 90% is attributed to a diverse dataset of 6 million faces from 87 countries, used to train deep learning algorithms. From such a diverse dataset, the AI learns which features of body language and speech coincide with various human emotions, feelings, and thoughts in communication (Marr, 2021). This can, for example, make marketing strategies far more effective by giving marketers deeper insight into consumers’ feelings, emotions, expectations, and preferences (Rostomyan et al., 2024).
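To make the idea of “multimodal” emotion AI more concrete, the following Python sketch shows one generic way of combining modalities, known as late fusion: separate recognisers for face, voice, and body language each output probabilities over the same emotion labels, and a weighted average combines them into one estimate. This is a hypothetical illustration, not Affectiva’s actual method; the emotion labels, modality weights, scores, and the function names `fuse` and `top_emotion` are assumptions made for the example.

```python
# Minimal late-fusion sketch (hypothetical; not any vendor's actual method).
# Assumption: each modality's recogniser already outputs a probability
# distribution over the same emotion labels.
from typing import Dict

EMOTIONS = ("joy", "anger", "sadness", "surprise", "neutral")


def fuse(modality_scores: Dict[str, Dict[str, float]],
         weights: Dict[str, float]) -> Dict[str, float]:
    """Weighted average of per-modality emotion probabilities."""
    # Normalise weights over the modalities actually present.
    total_weight = sum(weights[m] for m in modality_scores)
    fused = {e: 0.0 for e in EMOTIONS}
    for modality, scores in modality_scores.items():
        w = weights[modality] / total_weight
        for e in EMOTIONS:
            # Labels a modality did not report count as probability 0.
            fused[e] += w * scores.get(e, 0.0)
    return fused


def top_emotion(fused: Dict[str, float]) -> str:
    """Return the label with the highest fused probability."""
    return max(fused, key=fused.get)


if __name__ == "__main__":
    # Hypothetical outputs of three recognisers for one viewer.
    scores = {
        "face": {"joy": 0.6, "surprise": 0.3, "neutral": 0.1},
        "voice": {"joy": 0.4, "neutral": 0.5, "anger": 0.1},
        "body": {"neutral": 0.7, "joy": 0.3},
    }
    weights = {"face": 0.5, "voice": 0.3, "body": 0.2}
    fused = fuse(scores, weights)
    label = top_emotion(fused)
    print(label, round(fused[label], 2))  # prints: joy 0.48
```

Here the face channel’s “joy” signal outweighs the body channel’s “neutral” reading, so the fused estimate is “joy” at about 0.48. The weights are set by hand only for illustration; in practice they would more plausibly be learned from data.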

As mentioned above, “Affectiva”, an emotion recognition software company, helps advertisers and video marketers gather moment-to-moment facial expressions of viewers watching a video with its product Affdex for Market Research and Data Analysis. These data are compared against the company’s emotion database and against benchmarks for sales lift, brand recall, and more, giving customers such as Kellogg’s and CBS ideas for optimizing their content, products, branding strategy, marketing, and even their media presence. The company is also helping the automotive industry find ways to use artificial emotional intelligence to transform the transportation experience, including road safety and a smooth and enjoyable passenger experience. This includes advanced driver state monitoring solutions that identify an impaired or tired driver. Another company supporting marketers with artificial emotional intelligence solutions for market research is “Realeyes”, which helps leading brands, media companies, and tech platforms make smarter decisions, boost advertisement performance, verify digital identity, and make customer experiences more human. It uses webcams, computer vision, and artificial intelligence to analyze viewers’ facial expressions as they watch video content. In fact, “Realeyes” can give critical feedback on the effectiveness of the creative content used in advertising campaigns, which helps companies such as Coca-Cola and Pepsi make their marketing strategies more effective and generate more sales (Marr, 2021; Rostomyan et al., 2024).

Microsoft even has a whole team dedicated to developing new technologies that promote emotional resilience and psychological well-being, using artificial emotional intelligence to sense and respond to emotional states. This so-called HUE (Human Understanding and Empathy) team is tasked with bringing artificial emotional intelligence to Microsoft products, specifically in the areas of empathetic search, human understanding, and more adaptive workspaces (Rostomyan, 2024a). Furthermore, smooth human-machine interactions help ensure healthier and better human capital, which is very beneficial in achieving net-zero policies in various fields (Rostomyan, 2024b).

Emotion AI can even serve as a form of assistive technology for people with autism, who often struggle to pick up on the emotional cues of others when communicating. A wearable device could help them “read” the emotions of others and respond appropriately to the other person’s needs, depending on whether that person is experiencing positive or negative emotions.

Now, let us have a closer look at some of the possible benefits and pitfalls of Emotion AI in Human-Machine communicative interactions.

The Benefits of Emotion AI in Human-Machine Communicative Interactions

As we have seen, Emotion AI, also known as Affective Computing, refers to technologies capable of recognizing, interpreting, and responding to human emotions. Its integration into human-machine communication offers several significant advantages briefly discussed below:

1. Enhancement of User Experience

By enabling systems to detect and adapt to users’ emotional signals and states, Emotion AI facilitates more natural, intuitive, user-friendly, and user-centered communicative interactions. This emotional capacity contributes to increased user interest, satisfaction, and engagement.

2. Improved Communication Effectiveness

Emotion AI enhances communicative efficiency by identifying emotional signals such as confusion, anger, resentment, frustration, irritation, fear, glee, or enthusiasm. This allows systems to adjust their responses dynamically, thus minimizing misunderstandings, ensuring smooth human-machine communication, and optimizing information exchange.

3. Adaptive System Behavior

Emotionally aware systems can tailor their communicative strategies in real time based on users’ immediate affective feedback. Such adaptability supports more context-aware, emotion-aware, and responsive interactions, fostering a sense of interpersonal relevance and engagement.

4. Applications in Mental Health and Emotional Well-being

Emotion AI shows promise in the domains of psychological health and emotional support. By identifying early indicators of emotional distress, irritation, or stress in the workplace, these systems can facilitate timely interventions and psychological support, thereby supporting therapeutic and preventive care practices.

5. Enhanced Customer Service and Support

In commercial contexts, emotion-aware virtual agents can manage customer interactions with greater sensitivity and emotional intelligence, which can improve service quality, generate interest, build trust, and increase customer loyalty and brand satisfaction (see more in Rostomyan et al., 2024).

6. Facilitation of Human-Machine Rapport

By simulating aspects of human emotional intelligence, machines can foster greater trust, empathy, care, support, and relationship building. This contributes to a more socially acceptable and emotionally resonant form of human-machine communicative interaction, in which humans feel seen and heard (see more in Rostomyan, 2024b).

7. Support for Accessibility and Inclusivity

Emotion AI can provide communicative support for individuals who find it difficult to recognize emotions (e.g., individuals with autism spectrum disorder, social anxiety, or even depression), thereby enhancing accessibility and enabling more inclusive human-machine interaction paradigms.

As we have seen, Emotion AI brings many advantages. Nonetheless, like everything in life, Emotion AI has both benefits and pitfalls, which currently shape the landscape of human-machine communicative interactions.

The Pitfalls of Emotion AI in Human-Machine Communicative Interactions

Despite its potential, the integration of Emotion AI into human-machine interactions also presents several critical challenges and limitations, briefly discussed below:

1. Emotion Recognition Accuracy and Cultural Bias

Emotion AI systems often rely on facial expressions, voice, or physiological signals to detect and interpret human emotions. These methods can be imprecise and culturally biased, leading to misinterpretation and reduced reliability across diverse user populations and contexts.

2. Contextual and Cultural Limitations

Emotions are deeply contextual and culturally mediated. Emotion AI may therefore fail to account for these subtle nuances, resulting in oversimplified or even incorrect emotional attributions, especially in cross-cultural or ambiguous situations.

3. Privacy and Ethical Concerns

The collection and analysis of emotional data also raise significant ethical issues, particularly concerning user consent, data security, data privacy, and the potential misuse of sensitive affective information for manipulation or surveillance.

4. Lack of Genuine Empathy

While Emotion AI can simulate empathetic responses, it lacks “true” emotional care and deeper understanding. This can lead to superficial or even inappropriate communicative interactions, especially in emotionally sensitive contexts such as mental health support, since its “compassion” is programmed, unlike the genuine compassion of its human counterparts.

5. User Overreliance

Users may overestimate the capabilities of Emotion AI, mistaking it for genuine empathy or understanding. This “humanization” can lead to misplaced trust and unrealistic expectations. Furthermore, when used for decision-making, AI agents are prone to over-relying on heuristics, amplifying learned biases, or overlooking ethical implications in their outputs (Hamilton, 2025). Besides, recent research suggests that overreliance on AI technologies in medical care can be risky, since their outputs may be limited or misleading.

6. Technological Limitations and Ambiguity

Emotion detection technologies often struggle with ambiguous or mixed emotional expressions, especially when multiple modalities (e.g., facial, verbal, and vocal signals) are present, which can produce inconsistent system responses. Moreover, we humans are sometimes unaware of what we truly feel, and so cannot always describe our emotions to machines accurately enough for them to respond appropriately (Rostomyan, 2024a).

7. Legal, Regulatory, and Standardization Gaps

The rapid development of Emotion AI has outpaced legal and regulatory frameworks, leaving significant gaps in legal standards, ethical oversight, and accountability mechanisms. Although the “EU AI Act” has been adopted to regulate emerging technologies in the European Union (EU), much remains to be done to regulate the use of AI across countries, cultures, and situations (Rostomyan, 2024b).
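The ambiguity and overreliance pitfalls listed above suggest one design habit an emotion-aware system can adopt: act on an emotion estimate only when it is clearly ahead of the alternatives, and otherwise ask the user rather than assume. The following Python sketch illustrates this idea under stated assumptions: the probability dictionary, the thresholds `min_margin` and `min_confidence`, the function names `interpret` and `respond`, and the canned replies are all hypothetical, and real thresholds would need tuning for each application.

```python
# Confidence-aware handling of ambiguous emotion estimates (hypothetical sketch).
# Assumption: some recogniser already returns a probability per emotion label.
from typing import Dict, Tuple


def interpret(probs: Dict[str, float], min_margin: float = 0.15,
              min_confidence: float = 0.5) -> Tuple[str, float]:
    """Return (label, confidence), or ("uncertain", confidence) if ambiguous."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    top_label, top_p = ranked[0]
    runner_up_p = ranked[1][1] if len(ranked) > 1 else 0.0
    # Too weak overall, or too close to the runner-up: treat as mixed/ambiguous.
    if top_p < min_confidence or top_p - runner_up_p < min_margin:
        return "uncertain", top_p
    return top_label, top_p


def respond(probs: Dict[str, float]) -> str:
    """Pick a reply; when unsure, ask instead of acting on a guess."""
    label, _ = interpret(probs)
    if label == "uncertain":
        # The user, not the model, is the authority on their own feelings.
        return "I may be misreading you. How are you feeling about this?"
    if label in ("anger", "frustration"):
        return "I'm sorry this is frustrating. Let's try another way."
    return "Great, let's continue."


if __name__ == "__main__":
    print(respond({"anger": 0.45, "sadness": 0.40, "neutral": 0.15}))
    print(respond({"anger": 0.90, "neutral": 0.10}))
```

With mixed signals (anger 0.45 versus sadness 0.40), the sketch declines to label the user and asks instead; with a clear signal it adapts its reply. This does not solve the deeper problem that people may themselves be unsure of their emotions, but it avoids presenting a guess as a certainty.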

The aspects discussed above suggest that, although integrating Emotion AI into emerging technologies brings many benefits, the field also presents various challenges and limitations, which should be taken into account for successful, harmonious, and efficient human-machine communicative interactions.

Furthermore, in the context of human-machine communication, Elhajjar (2025) notes that chatbots like “Replika” are marketed as a solution for loneliness, offering conversations with an artificial “friend” who listens and responds empathetically to people’s needs. Yet, while these bots can in some cases be truly supportive, they cannot offer genuine emotional understanding, true empathy, or the complexity of real human-human relationships and communicative interactions. Many individuals seeking emotional support from AI chatbots may feel heard, yet research exploring the use of AI chatbots for emotional relief indicates that, while they may help in the short run, they lack the capacity to engage in long and deep emotional exchanges with their human counterparts. In fact, AI chatbots are designed to “simulate” conversation and may fall short of offering the true emotional connection that humans need for their mental well-being. The emotional emptiness left by AI-driven interactions may therefore, in some cases, lead to deeper isolation and even intensify loneliness. Thus, while these chatbots can be genuinely helpful in moments of acute loneliness, they cannot fully replace the nuanced and reciprocal nature of human relationships (Elhajjar, 2025).

This leads us to conclude that, if we want to experience the full spectrum of vivid positive emotions in our communicative interactions (in both human-human and human-machine settings), we should continue to value human-human interactions, which remain the most likely to give us the thrill associated with “happy hormones” such as oxytocin, serotonin, and dopamine. We should therefore prioritize human connections for our own sake, to ensure our psychological and emotional well-being.

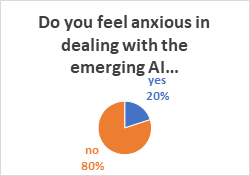

To find out whether people feel anxious when interacting with emerging AI technologies, we interviewed 50 people in person across Europe between 2022 and 2024. The results suggest that the majority of respondents (40, or 80%) feel comfortable using AI in their daily tasks, while only 10 (20%) reported feeling anxious when using AI.

Diagram: Human comfort in using AI

Source: Rostomyan research (2022-2024)

The diagram above suggests that advances in Emotion AI may have made human-machine communicative interactions more enjoyable and trustworthy, making it far easier for humans to communicate with machines. The survey also indicated that younger generations find it easier to interact with AI. Nonetheless, with the introduction of Emotion AI in various fields of human activity, older generations also enjoy communicating with AI technologies.

Yet, as discussed earlier, although the field of Emotion AI is very promising, with many advantages for human-machine communicative interactions, it also has various pitfalls that should be taken into account when communicating with emotion-aware AI technologies.

Summary

In summary, technologies with embedded Emotion AI, designed to detect, interpret, process, respond to, and even replicate human emotions, are very exciting and have considerable potential to transform human-machine communication. Among its key benefits, Emotion AI enhances user experience by enabling emotionally responsive, adaptive, and enjoyable interactions. It contributes to more efficient communication, supports mental health applications, helps strengthen marketing strategies, and improves customer service by simulating empathy and relational sensitivity. Furthermore, it fosters accessibility for individuals with communication difficulties and strengthens the human-machine connection through emotionally intelligent, efficient cooperation and enjoyable communication experiences.

However, as we have seen in this article, these advancements are accompanied by notable pitfalls, which should also be taken into account when engaging in human-machine communication. Emotion recognition technologies often struggle with inaccuracies, particularly those arising from cultural biases, and sometimes with contextual oversimplification, which can lead to misinterpretation of human emotional states and feelings. Moreover, ethical concerns, including privacy violations, data breaches, and the potential misuse of sensitive affective information, are also prominent. Additionally, users may develop unrealistic expectations of these systems or over-trust them, which can lead to breakdowns in human-machine communication. Technological limitations in detecting complex or mixed human emotions, alongside the absence of comprehensive regulatory frameworks, further complicate the responsible deployment of Emotion AI across various industries.

To sum up, while Emotion AI holds considerable promise for enriching and improving human-machine communicative interaction, its implementation must be approached with great caution, critical oversight, and a commitment to ethical and culturally aware use.

References

1. Elhajjar, S. (2025). “The Emotional Toll of Over-Digitalization: Preventing ‘Emotional Shattering’ in the Digital Age”, The European Business Review, July 3, accessed on: 12.08.2025, available at: https://www.europeanbusinessreview.com/the-emotional-toll-of-over-digitalization-preventing-emotional-shattering-in-the-digital-age/

2. Finlay, J. and A. Dix (1996). An Introduction to Artificial Intelligence. Boca Raton: Taylor and Francis Group.

3. Goleman, D. (1995). Emotional Intelligence. New York, Toronto, London, Sydney, Auckland: Bantam Books.

4. Hamilton, M. (2025). “The Flawed Assumption behind AI Agents’ Decision-making”, The European Business Review, July 6, accessed on: 12.08.2025, available at: https://www.europeanbusinessreview.com/the-flawed-assumption-behind-ai-agents-decision-making/

5. Marr, B. (2021). “What is Artificial Emotional Intelligence?”, accessed on: 15.12.2022, available at: https://bernardmarr.com/what-is-artificial-emotional-intelligence/

6. Nagy, Z. (2018). Artificial Intelligence and Machine Learning Enhancements Fundamentals: Develop Real World Applications Powered by Latest AI advances. Birmingham: Packt Publishing.

7. Rostomyan, Anna and Armen Rostomyan (2023). The importance of emotional capital in companies. Int J Res Hum Resour Manage, 5(2), pages 53-57. DOI: 10.33545/26633213.2023.v5.i2a.150

8. Rostomyan, Anna (2024a). “Insights into Emotion Detection with EI Tools and Its Applications through Artificial Intelligence (AI) in Human-Machine Interactions”. Proceedings of the 1st BSBI International Conference on Artificial Intelligence (AI), Volume 2(1), Special Issue of the Scientific Journal of Human and Machine Learning, Berlin School of Business and Innovation (BSBI), Berlin, Germany.

9. Rostomyan, Anna (2024b). Fostering Human Capital through Emotional Labour for Sustainable Human–Human-Machine Cooperation in Achieving Net-Zero Policies. In: Singh, R., Crowther, D. (eds) Transition Towards a Sustainable Future. Approaches to Global Sustainability, Markets, and Governance. Springer, Singapore, pages 139-162. DOI: https://doi.org/10.1007/978-981-97-5756-5_7

10. Rostomyan, Anna, Rostomyan, Armen and Ibeh Lawrence (2024). “The Vitality of Thick Data through Emotion AI in Successful Decision-Making and Problem-Solving Processes for Efficient Marketing Strategies in Sustainable Business and Organizational Operations”, International Journal of Managerial Studies and Research (IJMSR), 12(10), pp. 1-15. DOI: https://doi.org/10.20431/2349-0349.1210001.